Avoid AI Tutors!

Ever since LLMs started doing math, we have been reading about students using them to cheat on their homework. And yes, by now LLM solutions would pass any undergraduate course in math. Of course this is frowned upon, because the students rob themselves of the learning opportunity, etc. In this discussion, some math educators advocate a smarter use of LLMs: instead of letting the LLMs do the homework, students should use them as tutors.

In this post I want to give one example of the shortcomings of gpt-5 as a tutor. By nature this is anecdotal: I have not studied this systematically. Rather, I present the first example I tried. But I think it is interesting nevertheless, given that Altman described gpt-5 as “a handful of PhDs in your pocket”.

I start with a very simple observation. I computed the remainders of cubed integers modulo 9:

\[1^3 \equiv 1 \mod 9, \qquad 2^3 \equiv 8 \mod 9, \qquad 3^3 \equiv 0 \mod 9,\]
\[4^3 \equiv 1 \mod 9, \qquad 5^3 \equiv 8 \mod 9, \qquad 6^3 \equiv 0 \mod 9,\]
\[7^3 \equiv 1 \mod 9, \qquad 8^3 \equiv 8 \mod 9, \qquad 9^3 \equiv 0 \mod 9,\]
and so on. I observe a pattern: the remainders go $1, 8, 0, 1, 8, 0, \dotsc$. My simple task here is to find out why that is. And this is crucial. Mathematics is not only about theorems and their proofs; it is about understanding patterns and developing a language for them. It is about making visible what has been invisible. That is why the good old question “why?” is so difficult.
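A quick numerical check of the pattern can be sketched in a few lines of Python, using the built-in three-argument `pow` for modular powers. It confirms the pattern for $n = 1, \dotsc, 10000$, but, like any finite check, it neither proves the pattern nor explains it.

```python
# Remainders of n^3 modulo 9 for n = 1, ..., 10000.
cube_residues = [pow(n, 3, 9) for n in range(1, 10_001)]

# Compare with the observed pattern 1, 8, 0, repeating with period 3.
pattern = [1, 8, 0]
holds = all(r == pattern[(n - 1) % 3]
            for n, r in enumerate(cube_residues, start=1))

print(cube_residues[:9], holds)  # → [1, 8, 0, 1, 8, 0, 1, 8, 0] True
```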

I will start with my human attempt to explain this pattern, as if a student or my child had asked me about it. First of all, when thinking about this, I make associations: I try to gather in my mind facts about numbers that might be relevant. I think: every positive integer has a unique prime factorization, and taking “mod 9” can be interchanged with multiplication. There is a concrete statement for this: $n^3 \equiv (n \mod 9)^3 \mod 9$. This means that a priori there are only 9 integers to consider! A reduction from infinite to finite! And combining this with prime factorization, I can immediately explain why every integer divisible by 3 gives a remainder of zero: $3^3 = 27$ is divisible by 9, so $n^3$ is divisible by 9 whenever $n$ is divisible by 3.
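One way to make the reduction to 9 cases explicit is a sketch via the binomial theorem. Write $n = 9q + r$ with integers $q$ and $0 \le r \le 8$; every term except $r^3$ carries a factor of 9:

\[n^3 = (9q + r)^3 = 729q^3 + 243q^2 r + 27qr^2 + r^3 \equiv r^3 \mod 9.\]

Likewise, if $n = 3m$, then $n^3 = 27m^3 = 9 \cdot 3m^3 \equiv 0 \mod 9$.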

Now, having dealt with numbers divisible by 3, I might come up with the idea to consider remainders mod 3! And, lo and behold, $1^3 = 1$ and $2^3 = 8$; in the table above, 1, 4 and 7 (remainder 1 mod 3) all give 1, while 2, 5 and 8 (remainder 2 mod 3) all give 8. So the remainder of the cube $n^3$ mod 9 depends only on the remainder of $n$ mod 3.
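A direct way to see this, sketched again with the binomial theorem: write $n = 3q + r$ with $r \in \{0, 1, 2\}$. Then

\[n^3 = (3q + r)^3 = 27q^3 + 27q^2 r + 9qr^2 + r^3 \equiv r^3 \mod 9,\]

and $r^3$ equals $0, 1, 8$ for $r = 0, 1, 2$, which are exactly the three remainders in the pattern.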

That is my chain of thought. In a real situation I want to document that chain of thought. I want the student to think similarly, make the same attempts, mimic the process. And this does not stop here. The next steps in mathematical thinking would be:

- How can I generalize this? What does the sequence $n^k \mod m$, $n = 1, 2, 3, \dotsc$, look like for different $k$ and $m$?

- Surely this must have been considered hundreds, if not thousands, of years ago.

Let’s skip the first question here. I encourage students to follow up on it: do experiments, make observations, generalize arguments, play around.
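For such experiments, a small Python sketch can serve as a starting point. The helper names `power_residues` and `smallest_period` are made up for this illustration. `smallest_period(k, m)` searches for the smallest $d$ such that the remainder of $n^k$ mod $m$ depends only on $n$ mod $d$; since $n^k \mod m$ is periodic in $n$ with period $m$, it suffices to compare $n$ and $n + d$ for $n = 0, \dotsc, m - 1$.

```python
def power_residues(k: int, m: int, count: int) -> list[int]:
    """Remainders of n**k modulo m for n = 1, ..., count."""
    return [pow(n, k, m) for n in range(1, count + 1)]


def smallest_period(k: int, m: int) -> int:
    """Smallest d >= 1 such that n**k mod m depends only on n mod d.

    n**k mod m already has period m in n, so checking
    n = 0, ..., m - 1 against n + d covers all integers n.
    """
    for d in range(1, m + 1):
        if all(pow(n, k, m) == pow(n + d, k, m) for n in range(m)):
            return d
    return m  # not reached: d = m always works


# A few experiments: which remainder of n determines n^k mod m?
print(power_residues(3, 9, 12))
for k, m in [(3, 9), (2, 9), (3, 27), (3, 7)]:
    print(f"k={k}, m={m}: depends only on n mod {smallest_period(k, m)}")
```

With these definitions, `smallest_period(3, 9)` returns 3, matching the observation above, whereas squares modulo 9 need all nine residues (`smallest_period(2, 9)` returns 9), and cubes modulo 27 depend on $n$ mod 9.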

The second point is crucial, though. We stand on the shoulders of giants. There is always some previous math connected to whatever one studies. For me, quadratic residues come to mind. As the teacher, I have this background knowledge and I would hand out this link. I also suspect (though I did not know this for sure) that there might be a theory of cubic residues, which would be more relevant here. Searching Wikipedia, I found the theory of cubic reciprocity. The student would not know what this “reciprocity” is, but we can smell that it is what people in this area study. This concept must be important for answering many more general questions like ours. On Wikipedia I also see several references to the textbook Reciprocity Laws: from Euler to Eisenstein by Lemmermeyer, and I can download this book for free from the university library. And so my journey begins. Or maybe I stop, because I really have only a 20-minute budget for number theory today, and that is fine too. The time was well spent.

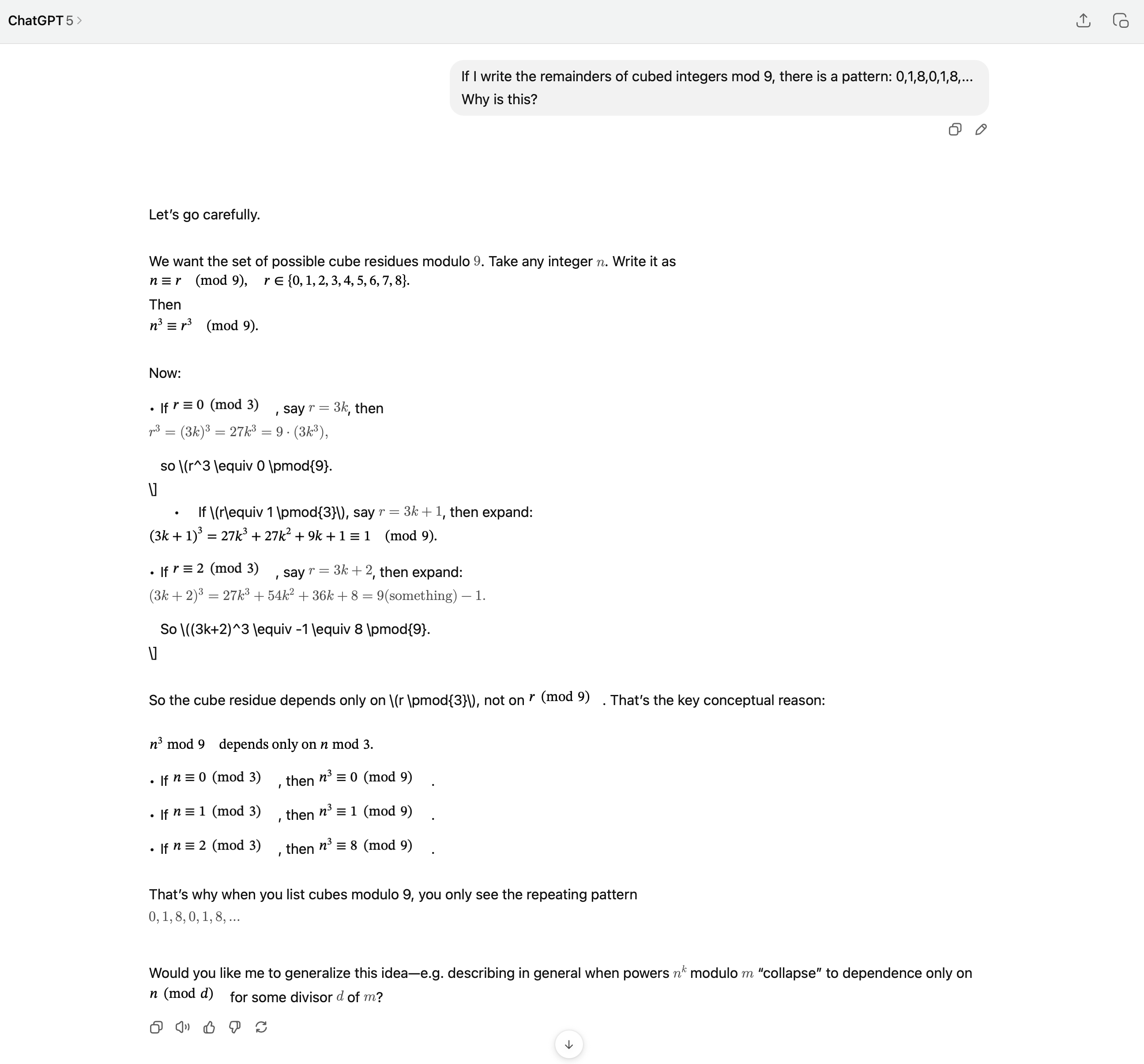

Now let’s see what the AI tutor (gpt-5 as of Sep 9, 2025) has to say about this!

I pretend to be a curious student and ask gpt-5 (in the app, with an active Pro account, to be specific). You can read the chat here (until OpenAI deletes it) or in the following screenshots:

First, there are some display errors in the LaTeX, but I will ignore those: I can still read what’s there, or I can ask again and maybe it will display fine next time. I also don’t know why the AI tells me “Let’s go carefully”. What does this even mean? The AI output is noisy. Somehow it’s never short and direct.

The first statement is what I also stated above: the problem reduces to only 9 cases. But the AI gives no justification for this. That $n^3 \equiv r^3 \mod 9$ holds (where $r$ is the remainder of $n$ mod 9) comes out of the blue, with no argument. That seems pretty bad in an educational context.

The following paragraph jumps directly to a computational proof that the remainder mod 9 depends on the remainder mod 3, without first stating this fact or any kind of plan for the argument. The computations are sound, though, and they would need to appear in a proof. But again, grading this, I would complain that the argument is missing. The LLM freely interchanges taking remainders with addition and multiplication. Why is that allowed?
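The missing justification is short. Suppose $a \equiv a' \mod m$ and $b \equiv b' \mod m$, say $a = a' + sm$ and $b = b' + tm$ for integers $s, t$. Then

\[a + b = a' + b' + (s + t)\,m, \qquad ab = a'b' + (a't + b's + stm)\,m,\]

so $a + b \equiv a' + b' \mod m$ and $ab \equiv a'b' \mod m$. Applied twice, this gives $n^3 \equiv r^3 \mod 9$ whenever $n \equiv r \mod 9$.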

Then it states that this is the key conceptual reason. But is it? Actually, this answer just restates my pattern. It ‘explained’ the solution by doing all the calculations at once. I now have certainty that this pattern persists. This is important to have, but I already had this certainty, because I knew that only 9 numbers need to be considered anyway.

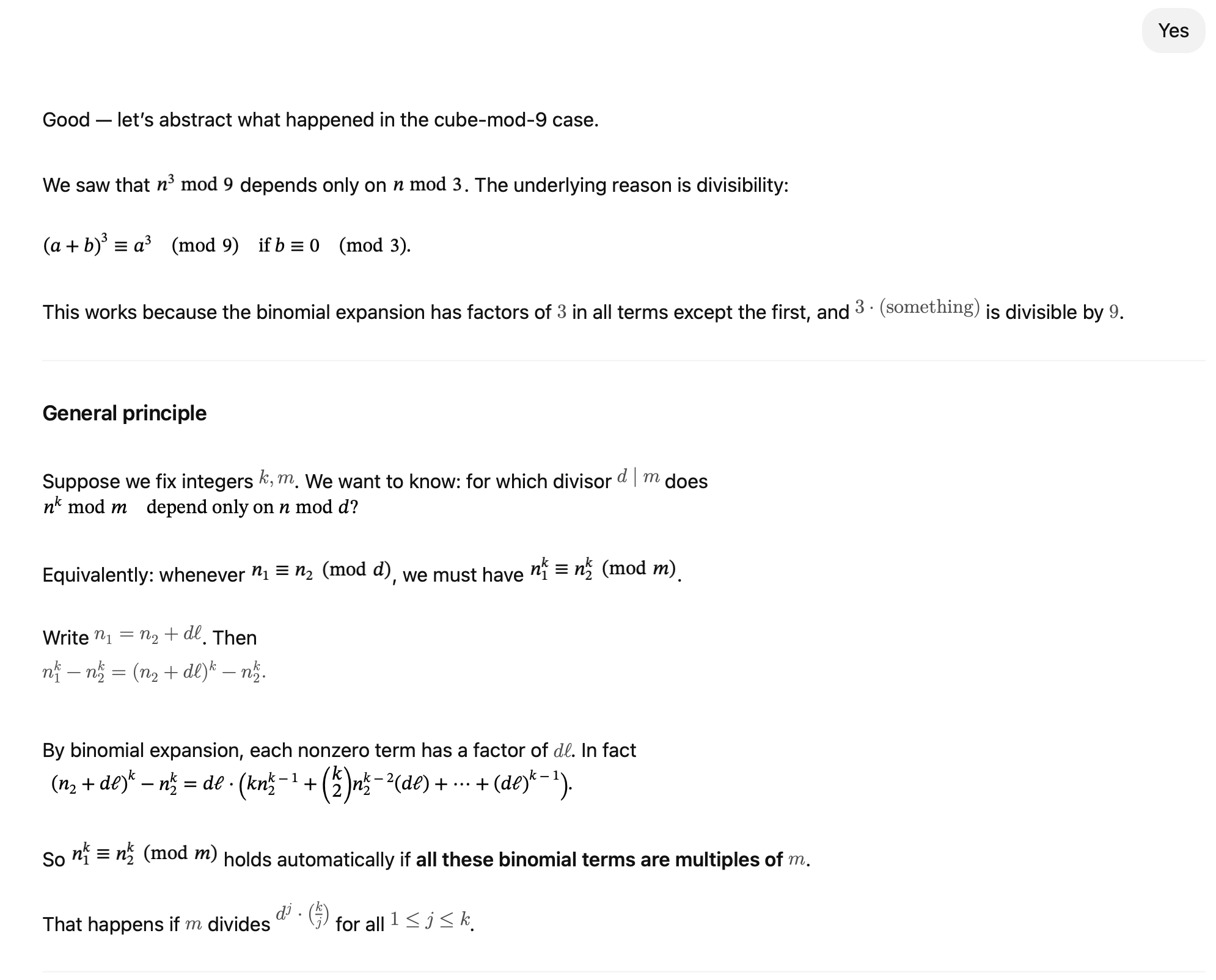

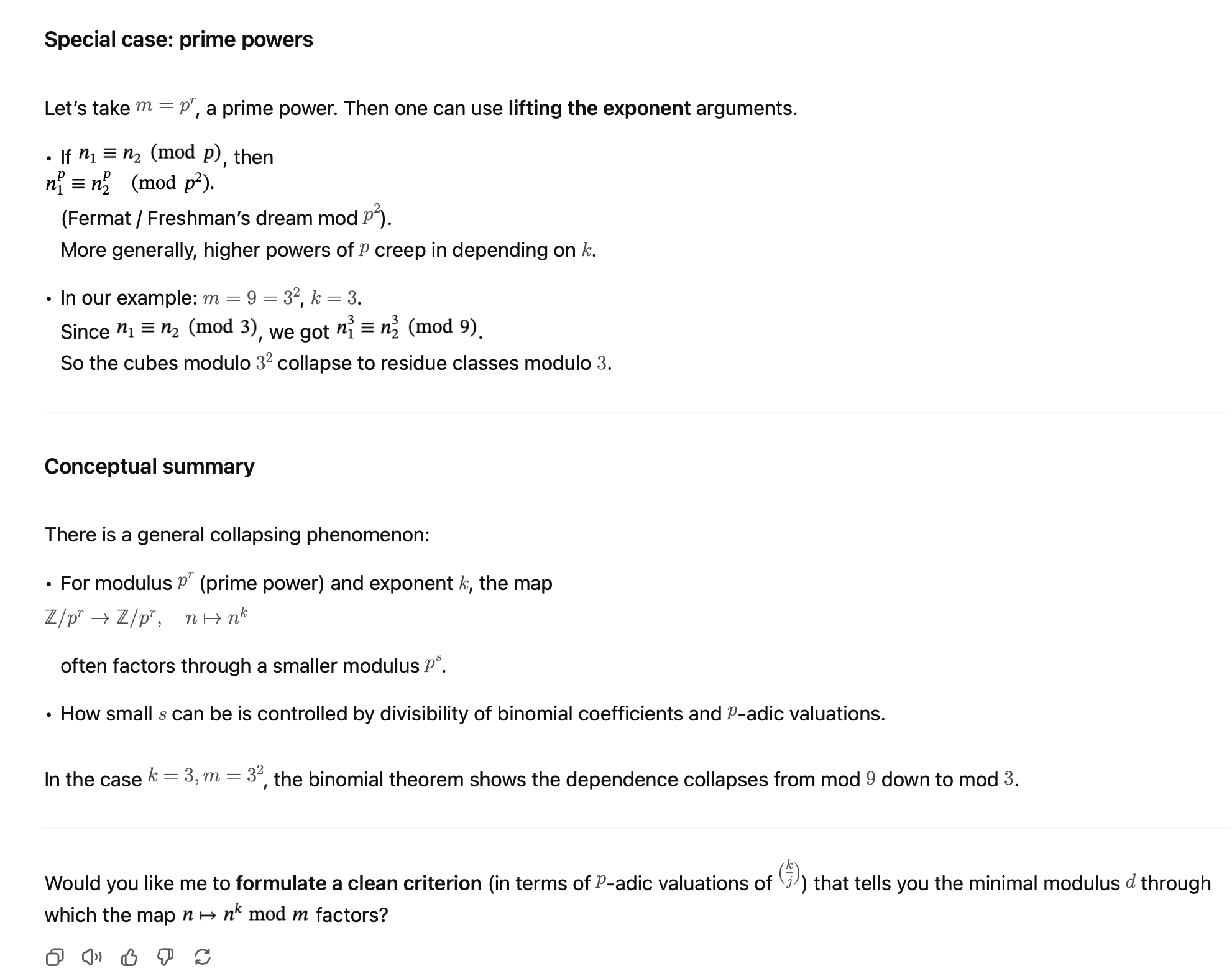

Finally it offers to describe a generalization. I look at the generalization, but it generalizes only the computation and then offers a conceptual summary that is clearly beyond me. Why is this called a collapsing phenomenon? What are these $\mathbb{Z}/p^r$ things? What does “factor through” mean? What are $p$-adic valuations and how do they control this collapse?

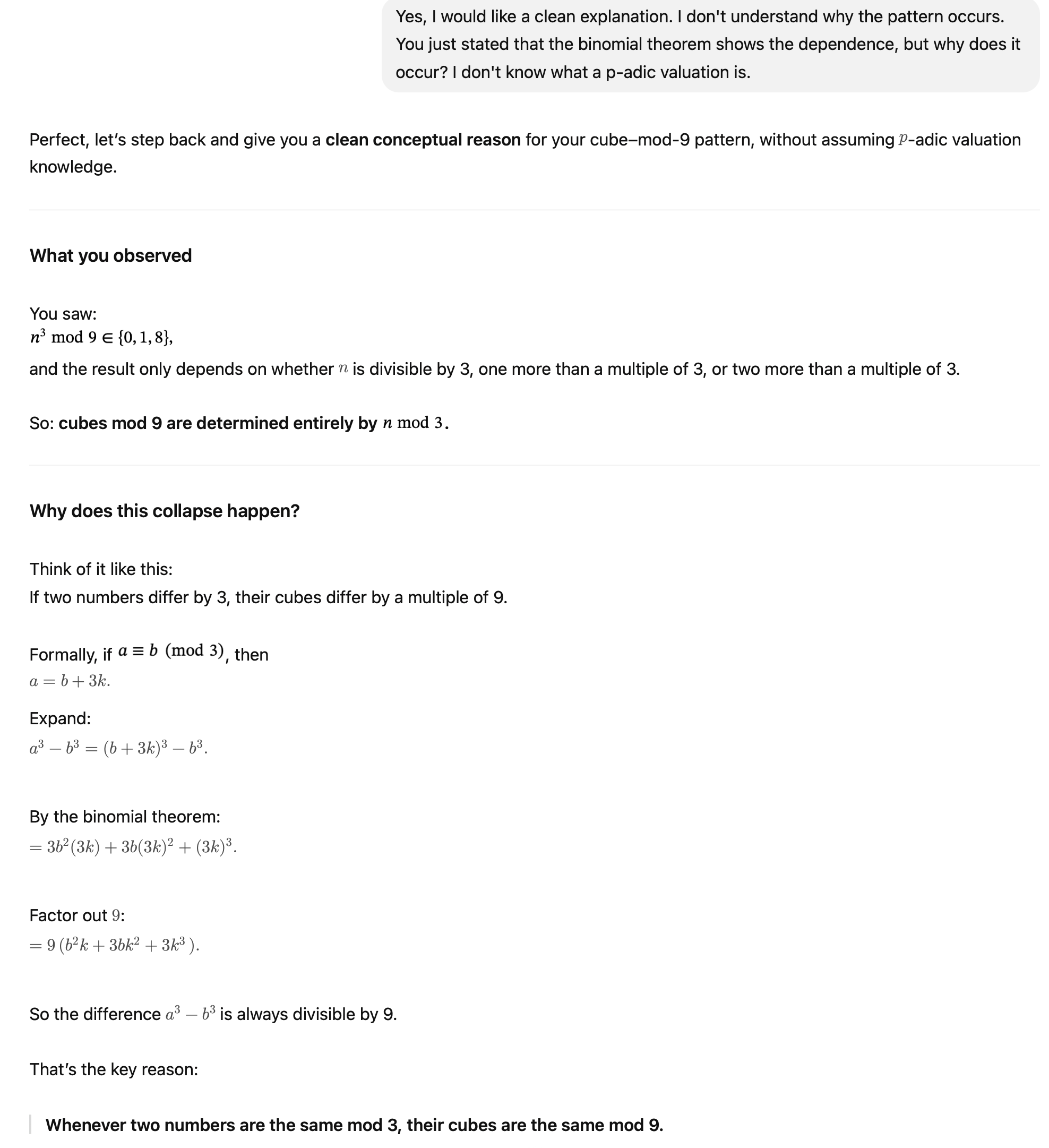

I ask for the offered “clean” answer without $p$-adic valuations. The result is just more of the same: it gives me the computation again, but in less technical language. What I find particularly irritating is that it now explains why it came up with the word “collapses”.

This is entirely contrary to how we write and talk in mathematics. A “clean” explanation can only be in terms of mathematical statements. This talking around the subject, taking different views, “explaining” (in fact only stating!) things three times in different words: this is what students who have not understood a subject use as a math-avoidance strategy.

We teach the students to NOT talk like this!

Finally, after a long text, we get a short answer that is computational but does serve as some sort of explanation of the phenomenon. What we don’t get is a learning process. At no point does the AI help me dig deeper or consider context. It never suggests looking into reciprocity, or into more general knowledge or sources. It just pulls some answer out of a hat.

A good mathematics teacher never just answers a question. A good teacher tries to understand the mental model of the person asking the question and to work on that model, that understanding. Quite generally, the solutions to exercises, the scratch paper and the blackboards full of math have no meaning in themselves. They are only there to communicate human mental models. And it is absurd to think that this communication between humans could persist in the noisy representation of math texts in LLMs. In mathematics education we use problems, writing and solutions to communicate ideas, to communicate things that are invisible and for which we have no previous shared experience.

When humans talk about tomatoes, say, we talk in the context of a shared experience; we can compare our experiences, etc. When we talk about math, the experience is much less shared and much more individual. Or maybe it is not shared at all. If I want to teach some new mathematics, I’m describing something that was truly invisible, and I’m invoking it for the first time in the mind of the listener. Learning mathematics is seeing things for the first time, all the time. Then playing around with them in your head. This is the part you have to do yourself. This is studying.

Inducing the ability to study requires having gone through the thought processes yourself. It also requires experience with teaching. What do students think when trying to solve problems? What difficulties do they report? What questions do they ask? The statistical answer machines have very little to offer for math education and are not suited as automated tutors. For this purpose they are even less useful than as homework-cheating machines.